Movement Research

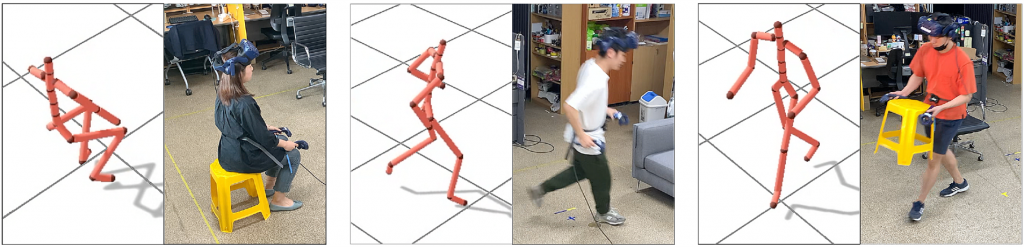

Telepresence Avatar

We investigate AR telepresence avatar technology that allows geographically separated people to meet and communicate through their avatars. Specifically, we are developing novel methods that enable avatars sent to a different space to move intelligently, conveying the meaning of the movements users perform in their original space. To this end, we analyze and model human behavioral characteristics of interacting with the space, objects, and other people, and develop computational methods that make the avatar reproduce these characteristics.

Recent papers

- Visual Guidance for User Placement in Avatar-Mediated Telepresence between Dissimilar Spaces, D. Yang, J. Kang, T. Kim, and S-H Lee, IEEE Transactions on Visualization and Computer Graphics (TVCG), Jan. 2024 [paper][demo]

- Real-time Retargeting of Deictic Motion to Virtual Avatars for Augmented Reality Telepresence, J. Kang, D. Yang, T. Kim, Y. Lee, and S-H Lee, IEEE ISMAR 2023, Oct. 2023 [project]

- A Mixed Reality Telepresence System for Dissimilar Spaces Using Full-Body Avatar, L. Yoon, D. Yang, C. Chung, and S-H Lee, Proceedings of ACM SIGGRAPH Asia 2020 XR, Dec. 2020 [abstract][paper][demo]

- Placement Retargeting of Virtual Avatars to Dissimilar Indoor Environments, L. Yoon, D. Yang, J. Kim, C. Chung, and S-H Lee, IEEE Transactions on Visualization and Computer Graphics (TVCG), Aug. 2020 (presented at IEEE ISMAR 2020) [project]

- Effects of Locomotion Style and Body Visibility of a Telepresence Avatar, Y. Choi, J. Lee, and S-H Lee, IEEE VR, Mar. 2020 [project]

- Multi-Finger Interaction between Remote Users in Avatar-Mediated Telepresence, Y. Lee, S. Lee, and S-H Lee, CASA 2017, in Computer Animation and Virtual Worlds, 28(3-4), May 2017 [data][paper]

- Retargeting Human-Object Interaction to Virtual Avatars, Y. Kim, H. Park, S. Bang, and S-H Lee, ISMAR 2016, in IEEE Transactions on Visualization and Computer Graphics, 22(11), pp. 2405-2412, Nov. 2016 [project]

Character Animation

Generating natural movement for virtual characters is a crucial technology for computer games, animation, VR/AR, and intelligent agents. Major topics include motion editing (including retargeting, in-betweening, and stylization), motion control, and motion planning. We explore both data-driven and physics-based approaches.

Recent papers

- MOCHA: Real-time Motion Characterization via Context Matching, D-K Jang, Y. Ye, J. Won, and S-H Lee, ACM SIGGRAPH Asia 2023, Dec. 2023 [project]

- DAFNet: Generating Diverse Actions for Furniture Interaction by Learning Conditional Pose Distribution, T. Jin and S-H Lee, Computer Graphics Forum (Proc. Pacific Graphics), Sep. 2023 [project]

- Motion Puzzle: Arbitrary Motion Style Transfer by Body Part, D-K Jang, S. Park, and S-H Lee, ACM Transactions on Graphics (TOG), Jan. 2022 [project]

- Diverse Motion Stylization for Multiple Style Domains via Spatial-Temporal Graph-Based Generative Model, S. Park, D-K Jang, and S-H Lee, The ACM SIGGRAPH / Eurographics Symposium on Computer Animation (SCA), Jun. 2021

- Constructing Human Motion Manifold with Sequential Networks, D-K Jang and S-H Lee, Computer Graphics Forum, May 2020 [project]

- Aura Mesh: Motion Retargeting to Preserve the Spatial Relationships between Skinned Characters, T. Jin, M. Kim, and S-H Lee, Computer Graphics Forum (Proc. Pacific Graphics), May 2018 [project]

- Multi-Contact Locomotion Using a Contact Graph with Feasibility Predictors, C. Kang and S-H Lee, ACM Transactions on Graphics, 36(2), 22:1-14, Apr. 2017 (presented at ACM SIGGRAPH 2017) [project]

Motion Capture and Understanding

We investigate efficient methods to capture and understand human motions with easily accessible sensors, such as VR controllers. We also develop methods to improve the quality of motion capture data.

Recent papers

- DivaTrack: Diverse Bodies and Motions from Acceleration-Enhanced Three-Point Trackers, D. Yang, J. Kang, L. Ma, J. Greer, Y. Ye, and S-H Lee, Computer Graphics Forum (Proc. Eurographics), Apr. 2024 [paper][demo]

- MOVIN: Real-time Motion Capture using a Single LiDAR, D-K Jang*, D. Yang*, D-Y Jang*, B. Choi*, T. Jin, and S-H Lee, Computer Graphics Forum (Proc. Pacific Graphics), Sep. 2023 [project]

- LoBSTr: Real-time Lower-body Pose Prediction from Sparse Upper-body Tracking Signals, D. Yang, D. Kim, and S-H Lee, Computer Graphics Forum (Proc. Eurographics), May 2021 [project]

- Projective Motion Correction with Contact Optimization, S. Lee and S-H Lee, IEEE Transactions on Visualization and Computer Graphics (TVCG), Mar. 2018 [project]

Digital Human Modeling and Simulation

Digital Fashion: Garment Modeling and Simulation

We are developing deep learning approaches that instantly create 3D garment models from images or 3D scans of actual garments worn by the user. We also investigate deep learning-based cloth and garment simulation methods that aim to run faster than conventional physics-based simulation, even for complex garments.

Recent papers

- NeuralTailor: Reconstructing Sewing Pattern Structures from 3D Point Clouds of Garments, M. Korosteleva and S-H Lee, ACM Transactions on Graphics (TOG), ACM SIGGRAPH 2022, July 2022 [project]

- Generating Datasets of 3D Garments with Sewing Patterns, M. Korosteleva and S-H Lee, NeurIPS (NIPS) Datasets and Benchmarks Track, Sep. 2021

- Estimating Garment Patterns from Static Scan Data, S. Bang, M. Korosteleva, and S-H Lee, Computer Graphics Forum (accepted), May 2021 [project]

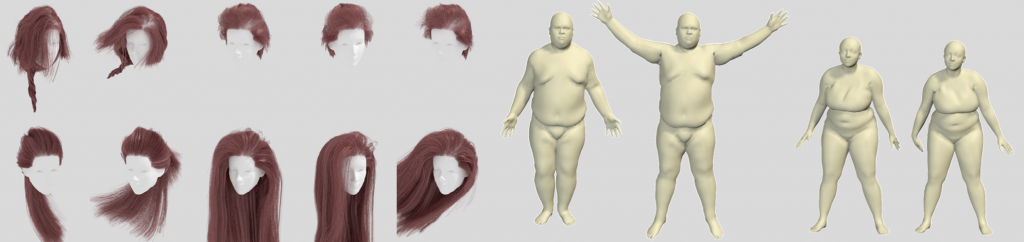

Human Body Modeling and Simulation

Generating and simulating realistic human models has long been one of the key topics in computer graphics research. We aim to develop methods that generate a hyper-realistic avatar resembling the user's appearance and distinctive way of moving. Topics include modeling and simulation of deformable soft tissue, muscles, and hair.

Recent papers

- Hair Modeling and Simulation by Style, S. Jung and S-H Lee, Eurographics 2018 Conference, in Computer Graphics Forum, 37(2), 355-363, May 2018 [project]

- Regression-Based Landmark Detection on Dynamic Human Models, D-K Jang and S-H Lee, Pacific Graphics 2017 Conference, in Computer Graphics Forum, 36(7), 73-82, Oct. 2017 [project]

- Data-Driven Physics for Human Soft Tissue Animation, M. Kim, G. Pons-Moll, S. Pujades, S. Bang, J. Kim, M. J. Black, and S-H Lee, ACM Transactions on Graphics (Proc. SIGGRAPH), 36(4), 54:1-12, 2017 [project]

- Realistic Biomechanical Simulation and Control of Human Swimming, W. Si, S-H Lee, E. Sifakis, and D. Terzopoulos, ACM Transactions on Graphics, 34(1), 10:1-15, Nov. 2014 (presented at ACM SIGGRAPH 2015) [project]